How to Loop inside Pentaho Data Integration Transformation

Question: I have the following PDI job structure: START ---> TR1 ---> TR2 ---> TR3, where TR1 returns 3 rows, and TR2, for which I enabled "execute every input row", returns 5 rows,

Answer: You need to pass 3 rows, each with 1 field, instead of a single row with 3 fields. The number of fields must match the number of parameters of your query. In short, transpose your data. Either read the line as a single field and then use Split field to rows, or read it as you do now and use Row normalizer. Both approaches should work.
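The transposition described in the answer can be sketched in plain Python. This is only an analogy for what the Split field to rows and Row normalizer steps do, not PDI code; the function names, the `value` field name, and the sample data are assumptions made for illustration.

```python
def split_field_to_rows(line, delimiter=","):
    """Analogue of Split field to rows: one delimited field becomes one row per value."""
    return [{"value": part.strip()} for part in line.split(delimiter)]


def normalize_row(row, field_names, target="value"):
    """Analogue of Row normalizer: each named field of one row becomes its own row."""
    return [{target: row[name]} for name in field_names]


# Hypothetical input: a single row with 3 fields.
wide_row = {"id_a": 10, "id_b": 20, "id_c": 30}

# Option 1: keep reading 3 fields, then normalize them into 3 rows.
rows_from_fields = normalize_row(wide_row, ["id_a", "id_b", "id_c"])

# Option 2: read the whole line as one field, then split it into 3 rows.
rows_from_line = split_field_to_rows("10, 20, 30")

print(rows_from_fields)
print(rows_from_line)
```

Either way the result is 3 rows with 1 field each, which is the shape a query with a single parameter expects when it is executed once per input row.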

How to run Pentaho transformations in parallel and limit executors

The Job Executor is a PDI step that allows you to execute a job several times, simulating a loop. The executor receives a dataset and then executes the job once for each row, or once for each set of rows, of the incoming dataset.

The Transformation Executor step allows you to execute a Pentaho Data Integration (PDI) transformation. It is similar to the Job Executor step, but works with transformations: it receives a dataset and executes the transformation several times, simulating a loop. Depending on your data transformation needs, the step can be set up to function in several ways. (Excerpted in part from Learning Pentaho Data Integration 8 CE, Third Edition.)

Allowing loops in transformations may result in endless loops and other problems. Loops are allowed in jobs because Spoon executes job entries sequentially. Make sure you don't build endless loops. This job entry can help you exit closed loops based on the number of times a job entry was executed.
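The "once for each row or a set of rows" behaviour of the executor steps can be pictured with a small Python sketch. It is a conceptual model only; `run_executor`, `child_job`, and the `group_size` parameter are hypothetical names and do not correspond to a PDI API.

```python
def run_executor(rows, run_child, group_size=1):
    """Call run_child once per group of rows, the way an executor step loops over its input."""
    if group_size < 1:
        raise ValueError("group_size must be at least 1")
    results = []
    for start in range(0, len(rows), group_size):
        batch = rows[start:start + group_size]
        results.append(run_child(batch))
    return results


def child_job(batch):
    """Hypothetical child job: reports which ids it received in this execution."""
    return [row["id"] for row in batch]


incoming = [{"id": i} for i in range(1, 6)]  # hypothetical 5-row dataset

per_row = run_executor(incoming, child_job)                  # one execution per row
per_pair = run_executor(incoming, child_job, group_size=2)   # one execution per 2 rows

print(per_row)
print(per_pair)
```

With 5 incoming rows, the child runs 5 times in the per-row case and 3 times when rows are grouped in pairs, with the last group holding the single leftover row.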

data integration Loops in Pentaho is this transformation looping

Transformation Steps. A step is one part of a transformation. Steps can provide you with a wide range of functionality, ranging from reading text files to implementing slowly changing dimensions. This chapter describes various step settings, followed by a detailed description of the available step types.

Pentaho Data Integration (PDI) is an extract, transform, and load (ETL) solution that uses an innovative metadata-driven approach. PDI includes the DI Server, a design tool, three utilities, and several plugins. You can download Pentaho from https://sourceforge.net/projects/pentaho/

To open a transformation, either click the Open file icon in the toolbar or hold down the CTRL+O keys. Select the file from the Open window, then click Open. The Open window closes when your transformation appears in the canvas.

As you build a transformation, you may notice a sequence of steps you want to repeat. This sequence can be turned into a mapping. Just like the Mapping step, you can use the Simple Mapping (sub-transformation) step to turn the repetitive, reusable part of a transformation into a mapping. Compared to the Mapping step, the Simple Mapping (sub-transformation) step accepts one and only one input.

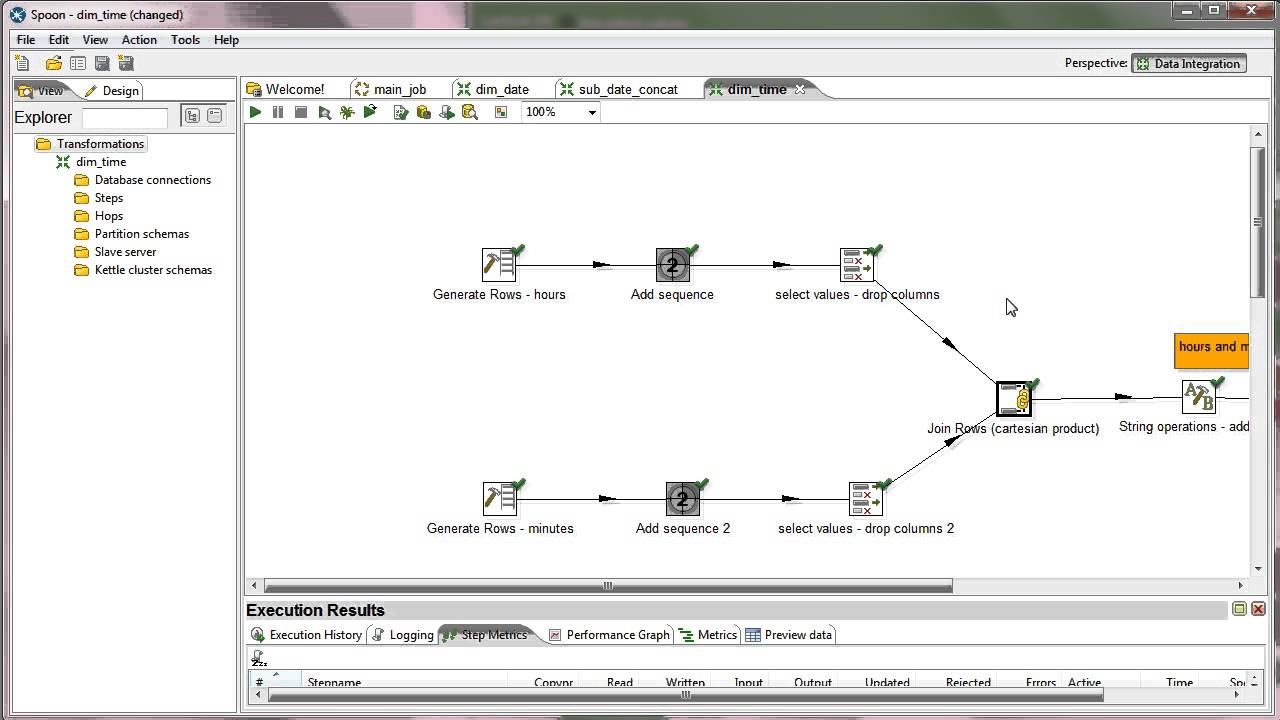

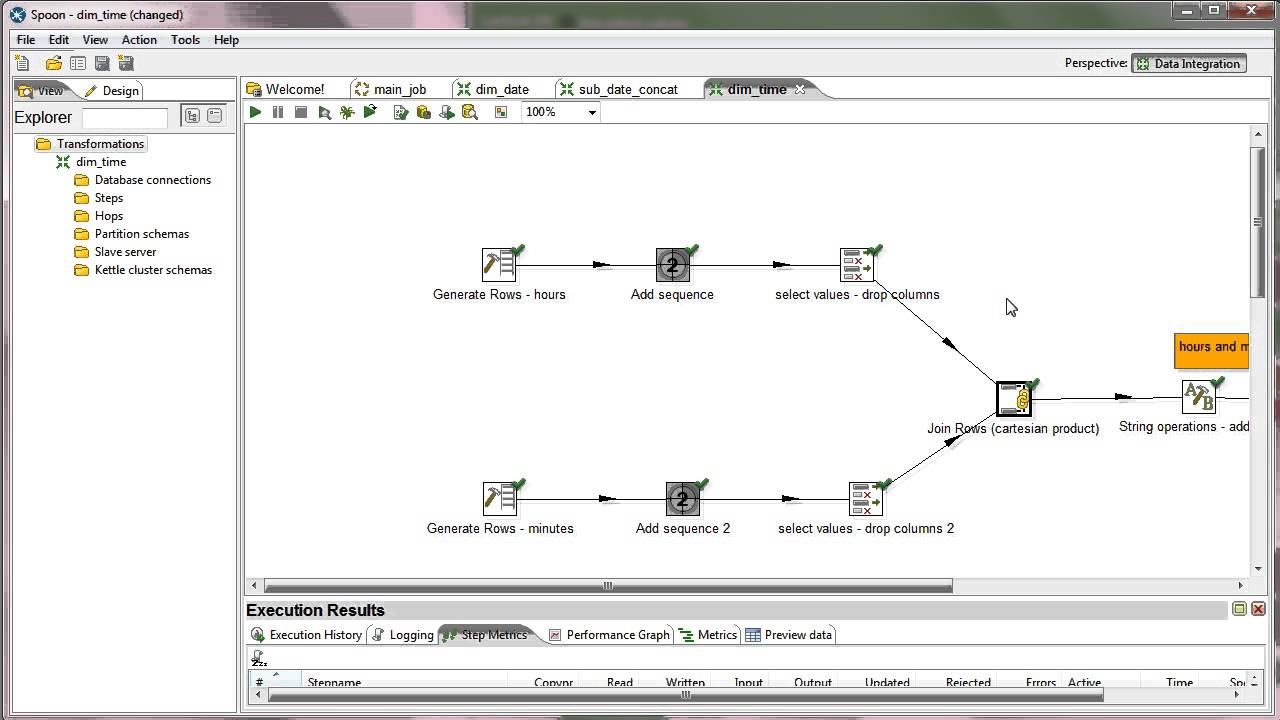

pentaho kettle tutorial data integration inflow the Cartesian step

Transformation (job entry). Last updated Oct 26, 2023. The Transformation entry runs a previously defined transformation within a job. This entry is the access point from your job to your ETL activity (transformation).

One transformation gets my data via a query, and the other transformation loops over each row of the query result. This means my result set of text or CSV files should be 6. Next, we go to the next transformation, Execute For Every Row/Loop. Here we get our copied row set by using Get Rows from Result.
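The two-transformation pattern above (one transformation fetches rows, the other runs once per row and writes a file) can be mimicked in Python as follows. This is a rough sketch under assumptions: the 6 sample rows, the file naming, and all function names are hypothetical and stand in for the query, the copied result rows, and the per-row transformation.

```python
import csv
import tempfile
from pathlib import Path


def get_data():
    """Stand-in for the first transformation: returns the rows of the query result."""
    return [{"id": i, "name": f"item_{i}"} for i in range(1, 7)]  # hypothetical 6 rows


def write_row_file(row, out_dir):
    """Stand-in for the second transformation: handles one row and writes one CSV file."""
    path = Path(out_dir) / f"row_{row['id']}.csv"
    with path.open("w", newline="") as handle:
        writer = csv.DictWriter(handle, fieldnames=list(row))
        writer.writeheader()
        writer.writerow(row)
    return path


def run_job(out_dir):
    """Stand-in for the job: pass the result rows on and execute once for every row."""
    result_rows = get_data()
    return [write_row_file(row, out_dir) for row in result_rows]


with tempfile.TemporaryDirectory() as tmp:
    written = run_job(tmp)
    file_count = len(list(Path(tmp).glob("*.csv")))

print(file_count)
```

The number of files equals the number of rows handed from the first transformation to the second, which is why 6 result rows should produce 6 output files.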

Pentaho Data Integration (PDI) is a powerful open-source ETL tool that can be used to extract, transform, and load data from various sources into a target system. In PDI, a typical ETL workflow consists of jobs and transformations. A transformation represents the data transformation part of an ETL workflow and performs data manipulation.

1. Add a Set Variable entry at the job level [Initialize Loop], define a variable named loop, and assign it your initial value. In my case, loop = 1. Next, add a transformation that gets the variable and sets the variables.

Loops in Pentaho: Concept, How to Apply, Understanding

Trans-Loop transformation: Here, we increment the loop variable (NEW_LOOP) by 1 and set the same variable, NEW_LOOP, again. Simple Evaluation: Here, we compare the NEW_LOOP variable with 2. You can change this value based on your project needs. With this setting, the loop runs two times. When NEW_LOOP reaches the value 2, the job is aborted.

Batch processing is generally implemented with the looping concept that Pentaho provides in its ETL jobs. Loops in PDI are supported only in jobs (.kjb), not in transformations (.ktr).
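The counter-based job loop can be modelled in a few lines of Python. This is a minimal sketch, assuming the counter starts at 1 and that the comparison against the limit happens after each pass and before the increment, which is one ordering that yields the two runs described; `run_counter_loop` and the `variables` dictionary are hypothetical stand-ins for the job and its variable space.

```python
def run_counter_loop(body, start=1, limit=2):
    """Run body once per pass until the NEW_LOOP counter reaches limit."""
    variables = {"NEW_LOOP": start}   # stand-in for the Set Variable entry
    runs = 0
    while True:
        body(variables["NEW_LOOP"])   # the work done in each pass
        runs += 1
        # Stand-in for Simple Evaluation: stop once the counter reaches the limit.
        if variables["NEW_LOOP"] >= limit:
            break
        # Stand-in for the Trans-Loop transformation: increment NEW_LOOP by 1.
        variables["NEW_LOOP"] += 1
    return runs


seen = []
total_runs = run_counter_loop(seen.append)

print(total_runs, seen)
```

Because the exit condition uses `>=` rather than `==`, a start value that is already above the limit still ends the loop after one pass instead of running forever, which is the kind of endless loop the job design has to avoid.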