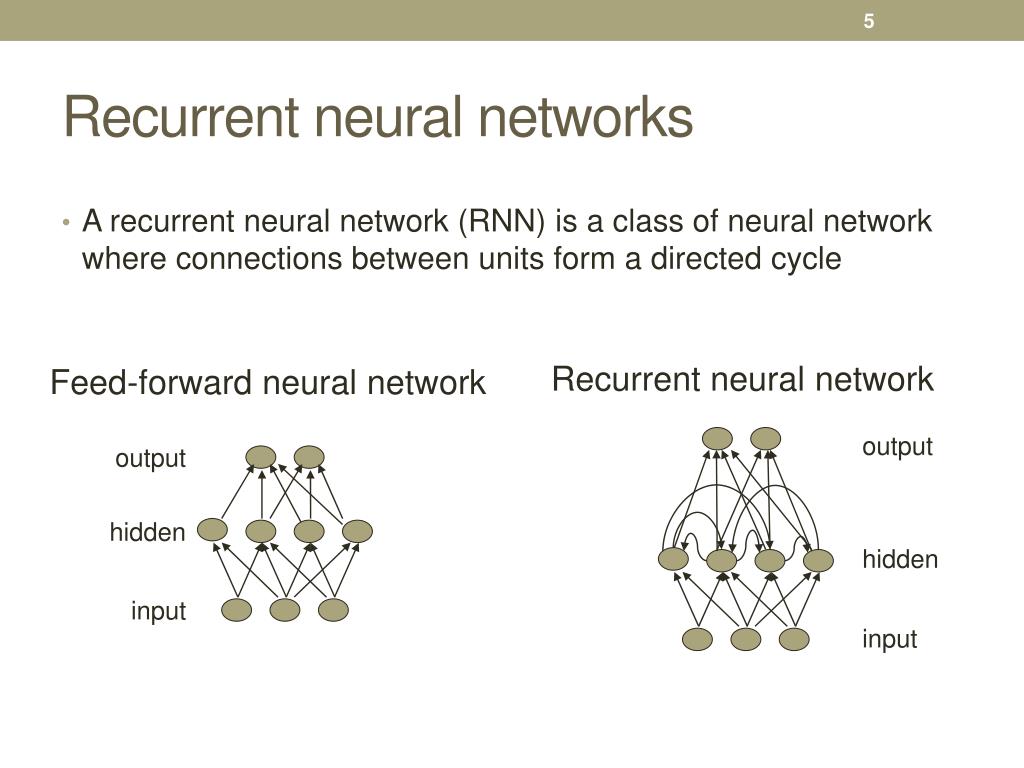

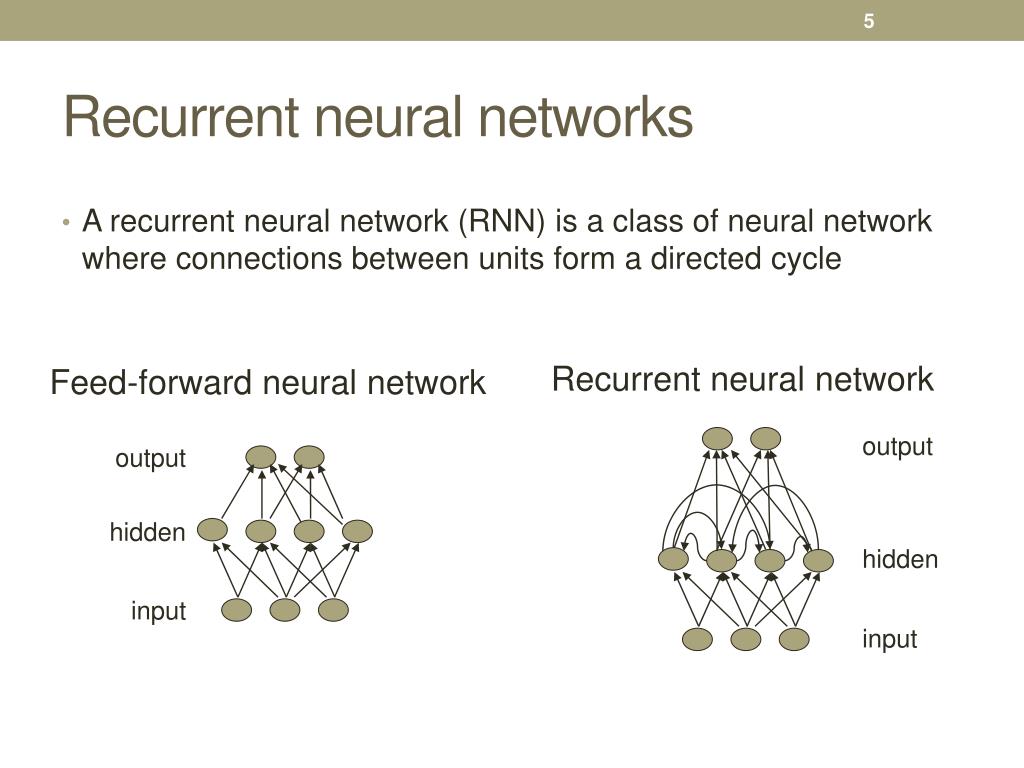

Recurrent Neural Network [Diagram: x → RNN → y]

We can process a sequence of vectors x by applying a recurrence formula at every time step:

$$h_t = f_W(h_{t-1}, x_t)$$

where $h_t$ is the new state, $h_{t-1}$ the old state, $x_t$ the input vector at time step $t$, and $f_W$ some function with parameters $W$. Notice: the same function and the same set of parameters are used at every time step.

Fei-Fei Li & Justin Johnson & Serena Yeung, Lecture 10, May 4, 2017

9.1 Recurrent Neural Networks

A recurrent neural network (RNN) is any network that contains a cycle within its network connections, meaning that the value of some unit is directly, or indirectly, dependent on its own earlier outputs as an input. While powerful, such networks are difficult to reason about and to train.
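The recurrence $h_t = f_W(h_{t-1}, x_t)$ can be made concrete with a short sketch. It assumes the common "vanilla" choice $f_W(h, x) = \tanh(W_{hh} h + W_{xh} x)$ with biases omitted; the names `W_hh` and `W_xh`, the sizes, and the random weights are illustrative assumptions, not taken from the slides. It shows the slide's point: one step function with one set of weights is reused at every time step, for a sequence of any length.

```python
import math
import random

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rnn_step(h_prev, x, W_hh, W_xh):
    """One application of the assumed recurrence h_t = tanh(W_hh h_{t-1} + W_xh x_t)."""
    a = matvec(W_hh, h_prev)
    b = matvec(W_xh, x)
    return [math.tanh(ai + bi) for ai, bi in zip(a, b)]

def run_rnn(xs, W_hh, W_xh, hidden_size):
    """Apply the same step function, with the same W_hh and W_xh, at every time step."""
    h = [0.0] * hidden_size  # initial state h_0
    states = []
    for x in xs:
        h = rnn_step(h, x, W_hh, W_xh)
        states.append(h)
    return states

random.seed(0)
H, D = 3, 2  # hypothetical hidden and input sizes
W_hh = [[random.uniform(-0.5, 0.5) for _ in range(H)] for _ in range(H)]
W_xh = [[random.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(H)]

# Sequences of different lengths reuse exactly the same parameters.
short = run_rnn([[1.0, 0.0]], W_hh, W_xh, H)
longer = run_rnn([[1.0, 0.0], [0.0, 1.0], [0.5, -0.5], [0.2, 0.3]], W_hh, W_xh, H)
print(len(short), len(longer))  # 1 4
```

Because the weights are shared, the first state of both sequences is identical: both start from $h_0 = 0$ and see the same first input.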

Generating Text with Recurrent Neural Networks (PowerPoint presentation)

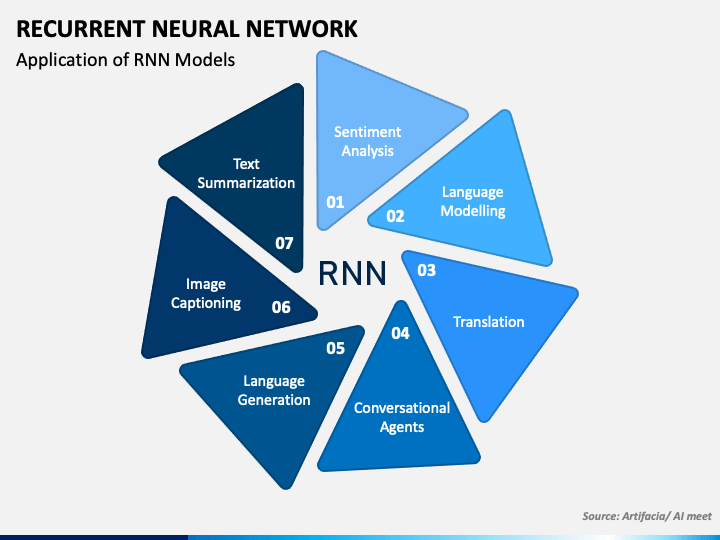

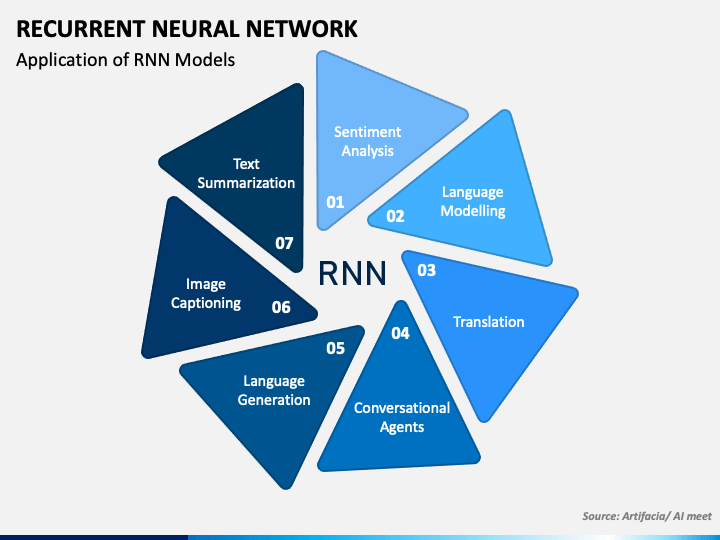

Fei-Fei Li, Ranjay Krishna, Danfei Xu, Lecture 10, May 7, 2020

Last Time: Recurrent Neural Networks. Fei-Fei Li, Ranjay Krishna, Danfei Xu, Lecture 11, May 6, 2021.

"Neural Image Caption Generation with Visual Attention", ICML 2015

[Figure: grid of image features z_{0,0} … z_{2,2}, with the generated word "person"]

Decoder: $y_t = g_V(y_{t-1}, h_{t-1}, c_t)$. A new context vector $c_t$ is computed at every time step.

Recurrent neural networks (RNNs) offer several advantages:

- Non-linear hidden state updates allow high representational power.
- They can represent long-term dependencies in the hidden state (theoretically).
- Weights are shared, so they can be used on sequences of arbitrary length.

Slides by: Ian Shi

The recurrent neural network works on the principle of saving the output of a layer and feeding it back to the input in order to predict the output of the layer. Now let's dive deeper into this presentation and understand what an RNN is and how it actually works. The following topics are explained in this recurrent neural networks tutorial: 1.
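The decoder equation $y_t = g_V(y_{t-1}, h_{t-1}, c_t)$ relies on a fresh context vector $c_t$ at every step, built from the grid of image features $z_{i,j}$. The sketch below shows one common way to form it, soft attention: score each $z_{i,j}$ against the previous decoder state, normalize the scores with a softmax, and take the weighted sum. The dot-product scoring is an assumption standing in for a learned alignment function, and the toy 3 x 3 grid of 2-d features is hypothetical.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def context_vector(h_prev, grid):
    """Soft attention over a grid of feature vectors grid[i][j] = z_{i,j}.

    Scores use a dot product with the decoder state h_{t-1}; this is an assumed
    stand-in for a learned alignment function.
    Returns c_t = sum over (i, j) of a_{i,j} * z_{i,j}, plus the weights a.
    """
    feats = [z for row in grid for z in row]  # flatten the i, j grid
    scores = [sum(hk * zk for hk, zk in zip(h_prev, z)) for z in feats]
    weights = softmax(scores)  # non-negative, sum to 1
    dim = len(feats[0])
    c = [sum(w * z[k] for w, z in zip(weights, feats)) for k in range(dim)]
    return c, weights

# Hypothetical 3 x 3 grid of 2-d features: z_{i,j} = [i, j].
grid = [[[float(i), float(j)] for j in range(3)] for i in range(3)]

c_uniform, _ = context_vector([0.0, 0.0], grid)   # zero state: equal weights
c_focused, w = context_vector([10.0, 0.0], grid)  # puts most weight on features with i = 2
print([round(v, 6) for v in c_uniform])  # [1.0, 1.0]
```

With a zero decoder state every location gets weight 1/9, so $c_t$ is the plain average of the grid; a different state shifts the weights, which is why the context vector changes from one time step to the next.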

Recurrent Neural Networks (RNNs): Input Layer (PowerPoint presentation)

- Explain Images with Multimodal Recurrent Neural Networks, Mao et al.
- Deep Visual-Semantic Alignments for Generating Image Descriptions, Karpathy and Fei-Fei
- Show and Tell: A Neural Image Caption Generator, Vinyals et al.
- Long-term Recurrent Convolutional Networks for Visual Recognition and Description, Donahue et al.

This course introduces principles, algorithms, and applications of machine learning from the point of view of modeling and prediction. It includes formulation of learning problems and concepts of representation, over-fitting, and generalization. These concepts are exercised in supervised learning and reinforcement learning, with applications to images and to temporal sequences.

A Fully Recurrent Network: The simplest form of fully recurrent neural network is an MLP with the previous set of hidden unit activations feeding back into the network along with the inputs. Note that the time t has to be discretized, with the activations updated at each time step. The time scale might correspond to the operation of real neurons, or for artificial systems

Motivation. Both multilayer perceptrons (MLPs) and convolutional neural networks (CNNs) take one data sample as input and produce one result. They are categorised as feedforward neural networks (FNNs) because they only pass data layer by layer and obtain one output for one input (e.g., an image as input with a class label as output). However, many kinds of data are time series.
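The description of a fully recurrent network as "an MLP with the previous set of hidden unit activations feeding back into the network along with the inputs" maps directly onto code: at each discrete time step, concatenate the current input with the previous hidden activations and pass the result through an ordinary MLP layer. This is a minimal sketch; the sigmoid units, the sizes, and the weight values are illustrative assumptions.

```python
import math

def fully_recurrent_step(x, h_prev, W, b):
    """One discrete time step of a fully recurrent network.

    The hidden layer is an ordinary MLP layer whose input is the current input x
    concatenated with the previous hidden activations h_prev. Sigmoid units are
    an assumption; any squashing function could be used.
    """
    z = x + h_prev  # list concatenation gives [x_t ; h_{t-1}]
    return [1.0 / (1.0 + math.exp(-(sum(w * v for w, v in zip(row, z)) + bias)))
            for row, bias in zip(W, b)]

# Hypothetical sizes and weights: 1 input unit, 2 hidden units, so W is 2 x (1 + 2).
W = [[0.5, 0.1, -0.2],
     [-0.3, 0.4, 0.2]]
b = [0.0, 0.0]

h = [0.0, 0.0]  # hidden activations before the first time step
history = []
for x in ([1.0], [0.0], [0.0]):  # time is discretized: one update per step
    h = fully_recurrent_step(x, h, W, b)
    history.append(h)
    print([round(v, 3) for v in h])
```

Concatenating $[x_t; h_{t-1}]$ and using one weight matrix is equivalent to keeping separate input and recurrent weight matrices; the concatenated form simply makes the "MLP with feedback" reading explicit.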

Simple Explanation of Recurrent Neural Network (RNN) by Omar

recurrent neural networks.

1 Introduction

It is well known that conventional feedforward neural networks can be used to approximate any spatially finite function given a (potentially very large) set of hidden nodes. That is, for functions which have a fixed input space there is always a way of encoding these functions as neural networks.

This paper applies recurrent neural networks in the form of sequence modeling to predict whether a three-point shot is successful [13].

2. Action Classification in Soccer Videos with Long Short-Term Memory Recurrent Neural Networks [14]

2. GRU

1. A new type of RNN cell (Gated Feedback Recurrent Neural
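The GRU mentioned above is a gated RNN cell. The sketch below follows one common formulation (biases omitted): an update gate $z$, a reset gate $r$, a candidate state $\tilde{h}_t = \tanh(W_h x_t + U_h (r \odot h_{t-1}))$, and the update $h_t = z \odot h_{t-1} + (1 - z) \odot \tilde{h}_t$. Formulations differ on whether $z$ or $1 - z$ multiplies the old state, so treat this as one illustrative variant; the parameter names, sizes, and random weights are assumptions.

```python
import math
import random

def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def gate(W, U, x, h, fn):
    """Apply fn elementwise to W x + U h (biases omitted for brevity)."""
    return [fn(a + b) for a, b in zip(matvec(W, x), matvec(U, h))]

def gru_step(x, h_prev, p):
    z = gate(p["W_z"], p["U_z"], x, h_prev, sigmoid)  # update gate
    r = gate(p["W_r"], p["U_r"], x, h_prev, sigmoid)  # reset gate
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]  # reset gate scales the old state
    h_tilde = gate(p["W_h"], p["U_h"], x, reset_h, math.tanh)  # candidate state
    # Interpolate: z close to 1 keeps the old state, z close to 0 takes the candidate.
    return [zi * hi + (1.0 - zi) * ci for zi, hi, ci in zip(z, h_prev, h_tilde)]

# Hypothetical sizes: 1 input unit, 2 hidden units; W_* are 2 x 1, U_* are 2 x 2.
SHAPES = {"W_z": 1, "W_r": 1, "W_h": 1, "U_z": 2, "U_r": 2, "U_h": 2}

random.seed(0)
params = {k: [[random.uniform(-1, 1) for _ in range(cols)] for _ in range(2)]
          for k, cols in SHAPES.items()}

h = [0.9, -0.9]
for x in ([1.0], [-0.5], [0.25]):
    h = gru_step(x, h, params)
print([round(v, 3) for v in h])
```

Because $h_t$ is a convex combination of the old state and a tanh-bounded candidate, the state stays in $[-1, 1]$ when it starts there, and the gates let the cell carry information across many steps.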

A recurrent neural network (RNN) is a special type of artificial neural network adapted to work with time series data or data that involves sequences. Ordinary feedforward neural networks are only meant for data points that are independent of each other. If, however, the data form a sequence in which each data point depends on the previous one, the network has to be modified to capture those dependencies.

A recurrent neural network is essentially a generalization of a feedforward neural network that has an internal memory. RNNs are a special kind of neural network designed to deal effectively with sequential data. This kind of data includes time series (a list of values of some parameters over a certain period of time), text documents, which can be seen as sequences of words, or audio.
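The difference between treating data points as independent and having an internal memory can be shown with two scalar toy models. The feedforward function sees only the current input, so two sequences ending in the same value produce the same output; the recurrent function carries a hidden state $h_t = \tanh(w_x x_t + w_h h_{t-1})$, so its output depends on what came before. The weights `w_x` and `w_h` are arbitrary illustrative values.

```python
import math

def feedforward(x, w=0.8):
    """Output depends only on the current input."""
    return math.tanh(w * x)

def recurrent(xs, w_x=0.8, w_h=0.5):
    """Scalar RNN h_t = tanh(w_x * x_t + w_h * h_{t-1}); returns the final state."""
    h = 0.0
    for x in xs:
        h = math.tanh(w_x * x + w_h * h)  # h is the network's internal memory
    return h

seq_a = [1.0, 1.0, 0.5]
seq_b = [-1.0, -1.0, 0.5]  # same last element, different history
print(feedforward(seq_a[-1]) == feedforward(seq_b[-1]))  # True
print(recurrent(seq_a) == recurrent(seq_b))  # False
```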

Recurrent Neural Network PowerPoint Template PPT Slides

This is a neural network reading a page from Wikipedia. This result is a bit more detailed: the first line shows whether the neuron is active (green) or not (blue), while the next five lines show what the neural network is predicting, in particular which letter will come next.

A recurrent neural network (RNN) is a type of artificial neural network that uses sequential data or time series data. These deep learning algorithms are commonly used for ordinal or temporal problems, such as language translation, natural language processing (NLP), speech recognition, and image captioning; they are incorporated into popular applications such as Siri, voice search, and Google.
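Predicting "what letter is going to come next" is the character-level language modeling setup. The sketch below shows only the forward pass of such a model: characters are one-hot encoded, fed through an RNN one at a time, and the final hidden state is mapped to a softmax distribution over the vocabulary. The weights are random and untrained (a hypothetical setup), so the predicted letter is arbitrary; training would adjust `W_xh`, `W_hh`, and `W_hy` so that the distribution matches real text.

```python
import math
import random

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    e = [math.exp(a - m) for a in v]
    s = sum(e)
    return [a / s for a in e]

def matvec(W, v):
    """Multiply matrix W (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rand_matrix(rows, cols):
    return [[random.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]

text = "wikipedia"
vocab = sorted(set(text))
idx = {ch: i for i, ch in enumerate(vocab)}
V, H = len(vocab), 8  # vocabulary size and a hypothetical hidden size

random.seed(1)
W_xh, W_hh, W_hy = rand_matrix(H, V), rand_matrix(H, H), rand_matrix(V, H)

def one_hot(ch):
    v = [0.0] * V
    v[idx[ch]] = 1.0
    return v

def predict_next(prefix):
    """Read prefix one character at a time; return P(next character) over vocab."""
    h = [0.0] * H
    for ch in prefix:
        pre = [a + b for a, b in zip(matvec(W_xh, one_hot(ch)), matvec(W_hh, h))]
        h = [math.tanh(a) for a in pre]
    return softmax(matvec(W_hy, h))

probs = predict_next("wiki")
best = vocab[max(range(V), key=lambda i: probs[i])]
print(dict(zip(vocab, [round(p, 3) for p in probs])), "most likely:", best)
```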