Leverage a Full Space to create a fun game using ARKit.

struct HandAnchor: A hand's position in a person's surroundings.

struct HandSkeleton: A collection of joints in a hand.

A source of live data about the position of a person's hands and hand joints.

Code: Detect Body and Hand Pose with Vision. Explore how the Vision framework can help your app detect body and hand poses in photos and video.
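As a rough illustration of how the HandAnchor and HandSkeleton types listed above fit together, here is a minimal sketch that reads the index fingertip position from live hand anchors. It assumes a visionOS app with an open immersive space and hand-tracking permission already granted. The class name `HandTrackingModel` is made up for this example. `ARKitSession` and `HandTrackingProvider` are the visionOS ARKit types that supply the anchors. Hand tracking needs a physical device, so this is not something the simulator will exercise.

```swift
import ARKit

// Hypothetical wrapper type for illustration only.
@MainActor
final class HandTrackingModel {
    private let session = ARKitSession()
    private let handTracking = HandTrackingProvider()

    /// Starts hand tracking and logs the index fingertip position of each tracked hand.
    func run() async {
        // Hand tracking is unavailable on some hardware and in the simulator.
        guard HandTrackingProvider.isSupported else {
            print("Hand tracking is not supported here.")
            return
        }

        do {
            try await session.run([handTracking])
        } catch {
            print("Could not start the ARKit session: \(error)")
            return
        }

        // Each update carries a HandAnchor for the left or right hand.
        for await update in handTracking.anchorUpdates {
            let anchor = update.anchor
            guard anchor.isTracked, let skeleton = anchor.handSkeleton else { continue }

            let tip = skeleton.joint(.indexFingerTip)
            guard tip.isTracked else { continue }

            // Joint transforms are relative to the hand anchor, so compose them
            // with the anchor's transform to get a pose relative to the world origin.
            let originFromTip = anchor.originFromAnchorTransform * tip.anchorFromJointTransform
            let position = originFromTip.columns.3
            print("\(anchor.chirality) index tip: \(position.x), \(position.y), \(position.z)")
        }
    }
}
```

An app would typically create one such object when its immersive space opens and call `run()` from a task, then feed the joint positions into its own gesture logic.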

2020CV mobile hand tracking + Apple's ARKit YouTube

Hand tracking. Use the person's hand and finger positions as input for custom gestures and interactivity. Scene reconstruction. Build a mesh of the person's physical surroundings and incorporate it into your immersive spaces to support interactions. Image tracking.

It's possible that you can get pretty close positions for the fingers of a tracked body using ARKit 3's human body tracking feature (see Apple's Capturing Body Motion in 3D sample code), but that feature requires ARBodyTrackingConfiguration, and face tracking is not supported under that configuration.

Provide a light temperature of around 6500 Kelvin (D65), similar to daylight. Avoid warm or any other tinted light sources. Set the object in front of a matte, middle gray background.

Scan real-world objects with an iOS app. The programming steps to scan and define a reference object that ARKit can use for detection are simple.

Track the Position and Orientation of a Face. When face tracking is active, ARKit automatically adds ARFaceAnchor objects to the running AR session, containing information about the user's face, including its position and orientation. (ARKit detects and provides information about only one face at a time.)

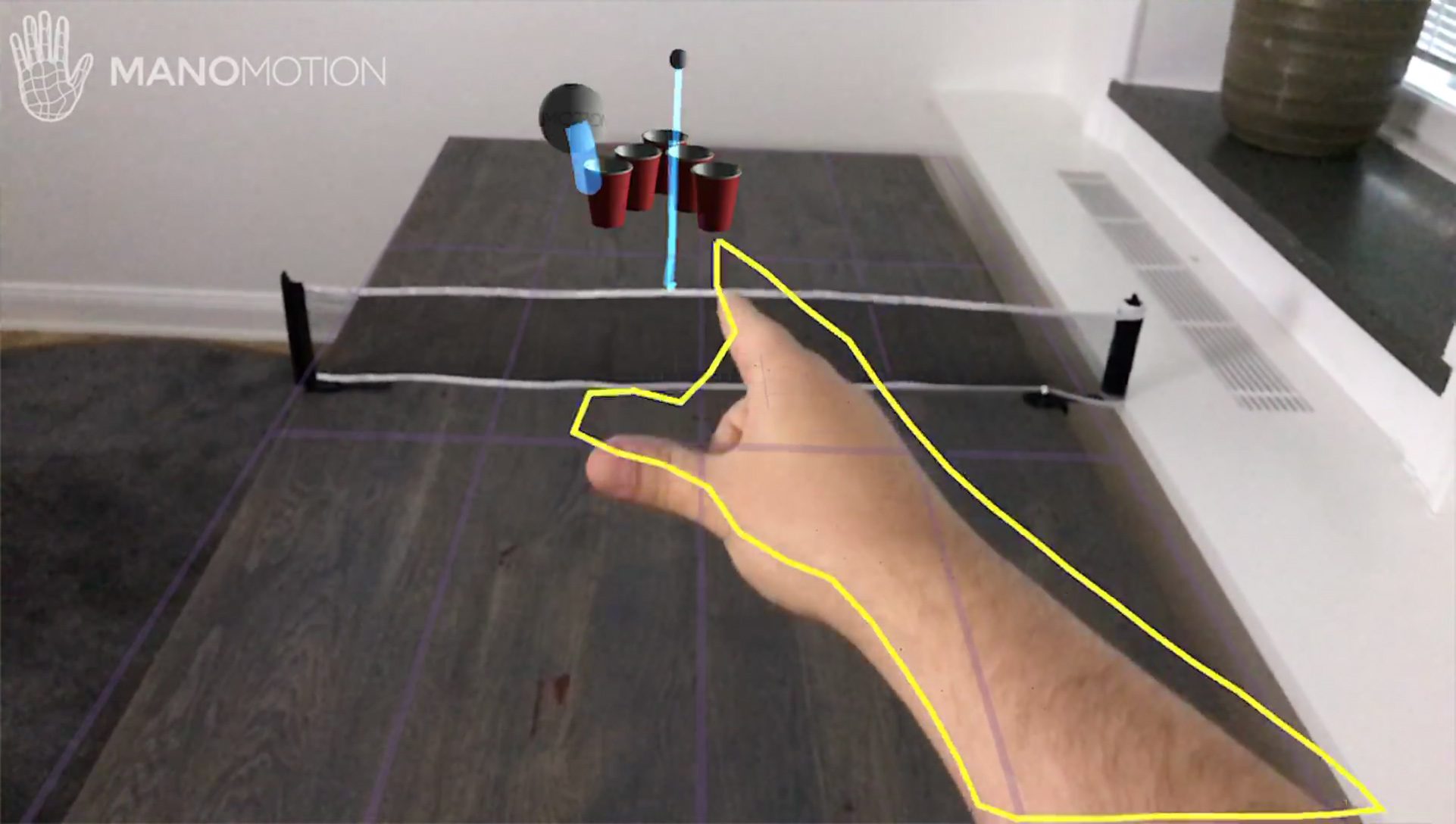

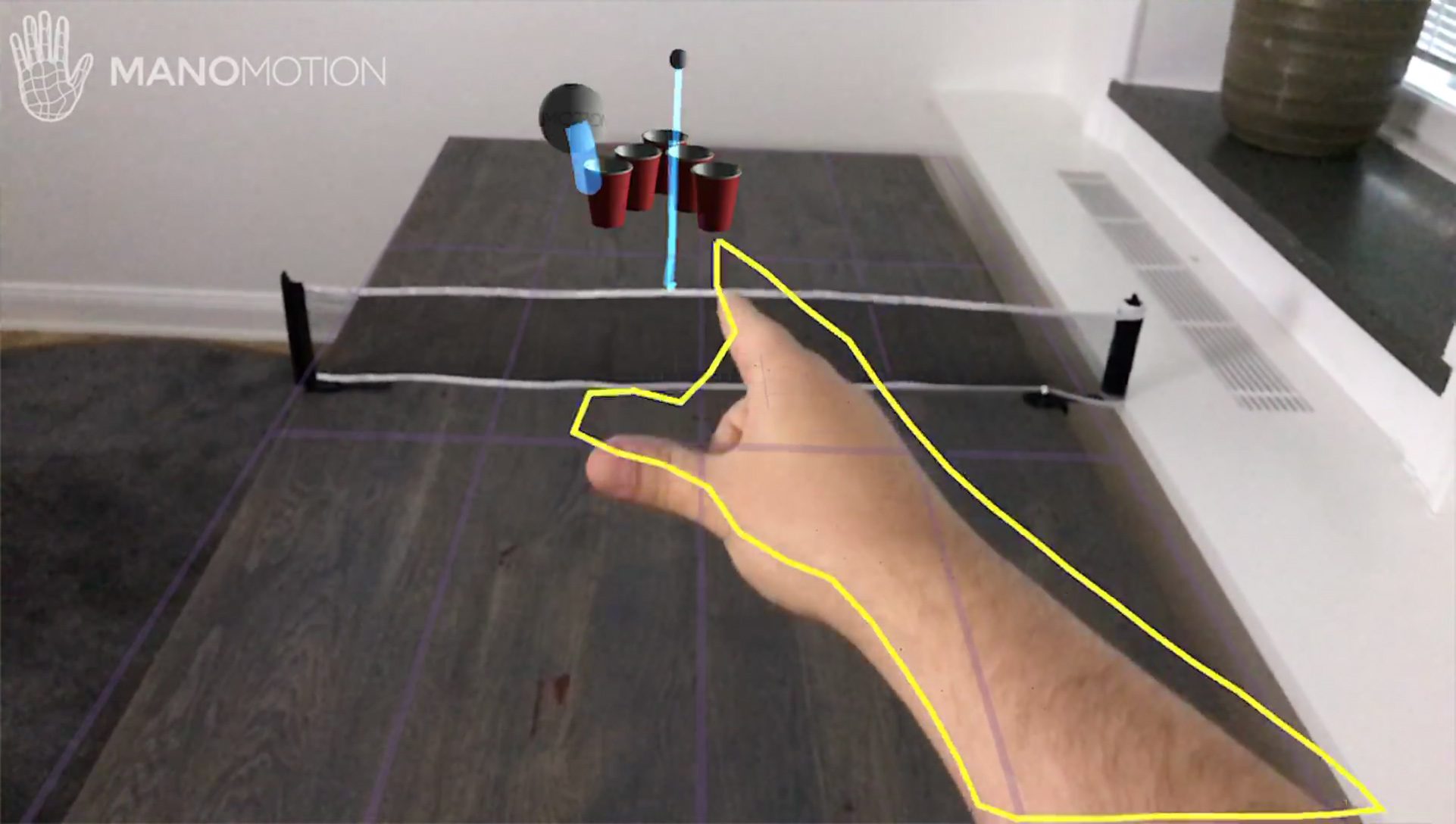

ManoMotion Brings Hand Gesture Input to Apple's ARKit Road to VR

ARKit from Apple is a really powerful tool that analyzes your environment and detects features in it. The power lies in the fact that you can use the features detected in the video to anchor virtual objects in the world and give the illusion that they are real.

Motion Capture. Capture the motion of a person in real time with a single camera. By understanding body position and movement as a series of joints and bones, you can use motion and poses as an input to the AR experience, placing people at the center of AR.

The basic requirement for any AR experience, and the defining feature of ARKit, is the ability to create and track a correspondence between the real-world space the user inhabits and a virtual space where you can model visual content.

In ManoMotion's video, we can see the ARKit-driven app recognize the user's hand and respond to a flicking motion, which sends a ping-pong ball into a cup, replete with all of the spatial.

ManoMotion Introduces Apple ARKit Hand Gesture Support VRScout

ARKit is Apple's framework and software development kit that developers use to create augmented reality games and tools. ARKit was announced to developers during WWDC 2017, and demos showed.

Neither the ARKit SDK nor the ARCore SDK offers hand tracking support, though. Both companies expect you to use the touchscreen on your device to interact with the digital assets in your real-world.

Hand Pose 🙌. Hand tracking has been implemented in many AR mockups, and has also been demoed for real within ARKit before, but these demos have used private APIs that companies and people built themselves. Here are some examples: 2020CV implementing basic hand tracking back in 2017; Augmented Apple Card with Hand Tracking (Concept).

In order to interact with 3D objects, the hand would likely need to be represented in 3D. However, Vision's hand pose estimation provides landmarks in 2D only. By combining some APIs, you may be able to get exactly what you're after. You would first use the x and y coordinates from the 2D landmarks in Vision's hand pose observations.
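The snippet above stops after its first step, so the following is only one plausible way to finish the idea, not the original answer's method. It assumes you already have a depth value in meters for the landmark (for example, sampled from a depth map) and the camera's pinhole intrinsics. The types `PinholeIntrinsics` and `Point3D` and the function `unproject` are hypothetical names for this sketch and are not part of Vision or ARKit. The result uses an image-style camera convention (x right, y down, z forward), so converting it to the convention of your rendering framework is left to the caller.

```swift
// Hypothetical helper types for illustration; not part of Vision or ARKit.
struct PinholeIntrinsics {
    var fx: Double  // focal length in pixels along x
    var fy: Double  // focal length in pixels along y
    var cx: Double  // principal point x, in pixels
    var cy: Double  // principal point y, in pixels
}

struct Point3D: Equatable {
    var x: Double
    var y: Double
    var z: Double
}

/// Back-projects a normalized 2D landmark (origin at the lower left, range 0...1,
/// as Vision reports points) plus a depth in meters into a camera-space point.
func unproject(normalizedX: Double,
               normalizedY: Double,
               depthMeters: Double,
               imageWidth: Double,
               imageHeight: Double,
               intrinsics k: PinholeIntrinsics) -> Point3D {
    // Convert to pixel coordinates. Pixel rows grow downward, so flip y.
    let u = normalizedX * imageWidth
    let v = (1.0 - normalizedY) * imageHeight

    // Standard pinhole back-projection: scale the ray through the pixel by depth.
    let x = (u - k.cx) / k.fx * depthMeters
    let y = (v - k.cy) / k.fy * depthMeters
    return Point3D(x: x, y: y, z: depthMeters)
}

// Usage with made-up numbers: a 1920x1440 image and a landmark 2 m away.
let intrinsics = PinholeIntrinsics(fx: 960, fy: 720, cx: 960, cy: 720)
let fingertip = unproject(normalizedX: 0.75, normalizedY: 0.25,
                          depthMeters: 2.0,
                          imageWidth: 1920, imageHeight: 1440,
                          intrinsics: intrinsics)
// fingertip is Point3D(x: 1.0, y: 1.0, z: 2.0)
```

Keeping the math in a plain function with no framework types makes it easy to check against known values before wiring it to real landmark and depth data.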

Body Tracking Example Using RealityKit, ARKit + SwiftUI // Coding on iPad Pro YouTube

AR Foundation supports 3D and 2D full body tracking, which includes hand transforms. However, if you're looking to track only a hand (with fingers, etc.), then that is not currently supported by ARKit. You may want to look at Apple's Vision framework, but we do not offer any integration with the Vision framework.

2022 UPDATED TUTORIAL: https://youtu.be/nBZ-dglGow0 Access Source Code on Patreon: https://www.patreon.com/posts/60601004 In this video, I show you ste.