Building a deep learning model to generate human-readable text with Recurrent Neural Networks (RNNs) and LSTMs, using the TensorFlow and Keras frameworks in Python, involves a few stages: using RNNs in TensorFlow and Keras as generative models, cleaning the text and building TensorFlow input pipelines with the tf.data API, and training an LSTM network on text sequences.

The tutorial follows the steps of downloading the Shakespeare dataset, reading the data, and processing the text. It demonstrates how to generate text using a character-based RNN, working with a dataset of Shakespeare's writing from Andrej Karpathy's "The Unreasonable Effectiveness of Recurrent Neural Networks".
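Processing the text for a character-based model usually means mapping every distinct character to an integer ID, then cutting the ID stream into chunks and splitting each chunk into an input sequence and a target sequence shifted by one character. In a TensorFlow pipeline this would be done on tensors with the tf.data API; the sketch below uses plain Python lists instead, and the helper names (`build_vocab`, `make_examples`, and so on) are chosen here for illustration.

```python
def build_vocab(text):
    """Return the sorted unique characters and a char -> id mapping."""
    vocab = sorted(set(text))
    char_to_id = {ch: i for i, ch in enumerate(vocab)}
    return vocab, char_to_id


def encode(text, char_to_id):
    """Turn a string into a list of integer ids."""
    return [char_to_id[ch] for ch in text]


def decode(ids, vocab):
    """Turn a list of integer ids back into a string."""
    return "".join(vocab[i] for i in ids)


def split_input_target(sequence):
    """Input is every token but the last; target is every token but the first."""
    return sequence[:-1], sequence[1:]


def make_examples(ids, seq_length):
    """Cut ids into non-overlapping chunks of seq_length + 1 and split each one."""
    chunk = seq_length + 1
    return [
        split_input_target(ids[start:start + chunk])
        for start in range(0, len(ids) - chunk + 1, chunk)
    ]


if __name__ == "__main__":
    text = "To be, or not to be"
    vocab, char_to_id = build_vocab(text)
    ids = encode(text, char_to_id)
    for inp, tgt in make_examples(ids, seq_length=4):
        print(repr(decode(inp, vocab)), "->", repr(decode(tgt, vocab)))
```

At every position the target is simply the next character, so one chunk of length `seq_length + 1` yields `seq_length` next-character predictions to train on.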

How to Build a Text Generator Using TensorFlow 2 and Keras in Python (The Python Code)

textgenrnn is a Python 3 module on top of Keras/TensorFlow for creating char-rnns. Its features include a modern neural network architecture that uses techniques such as attention-weighting and skip-embedding to accelerate training and improve model quality, and the ability to train on and generate text at either the character level or the word level.

How to create a poet/writer using Deep Learning (Text Generation using Python), by Pranj52 Srivastava (updated July 19th, 2022), opens with the observation that, from short stories to 50,000-word novels, machines are churning out words like never before.

Python for NLP: Deep Learning Text Generation with Keras, by Usman Malik, is the 21st article in his Python for NLP series. The previous article explained how to use Facebook's FastText library for finding semantic similarity and performing text classification.

In this guide, we'll build an autoregressive language model to generate text. We'll focus on the practical, minimal aspects of loading data, splitting it, vectorizing it, building a model, writing a custom callback, and training/inference.
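Because textgenrnn can train at either the character level or the word level, it helps to see what the two tokenizations produce for the same sentence. The regular expression below is a simplified assumption for illustration, not textgenrnn's actual tokenizer.

```python
import re


def char_tokens(text):
    """Character level: every character, including spaces, is a token."""
    return list(text)


def word_tokens(text):
    """Word level (simplified): lowercase words and punctuation marks are tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


if __name__ == "__main__":
    sentence = "Alice was beginning to get very tired."
    for name, tokens in [("char", char_tokens(sentence)), ("word", word_tokens(sentence))]:
        print(name, len(tokens), "tokens,", len(set(tokens)), "distinct")
```

Character-level models work with a small vocabulary but long sequences; word-level models work with short sequences but a much larger vocabulary. That is the main trade-off when choosing between the two modes.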

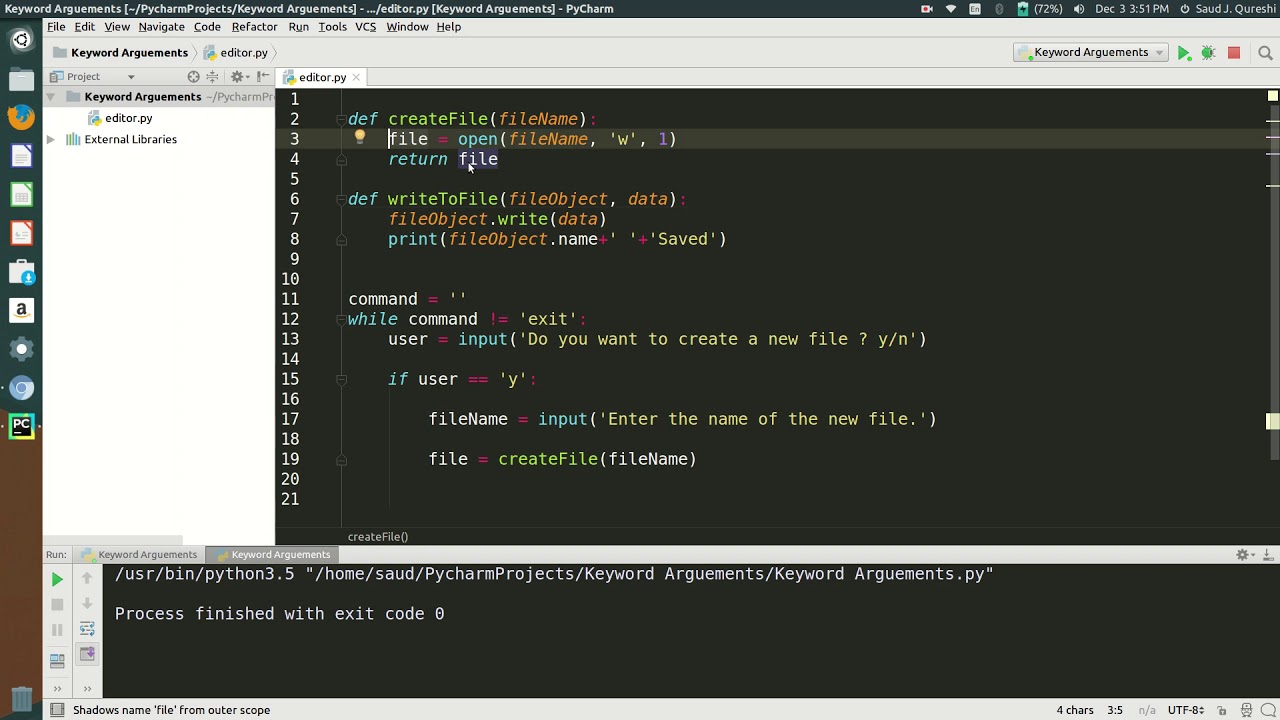

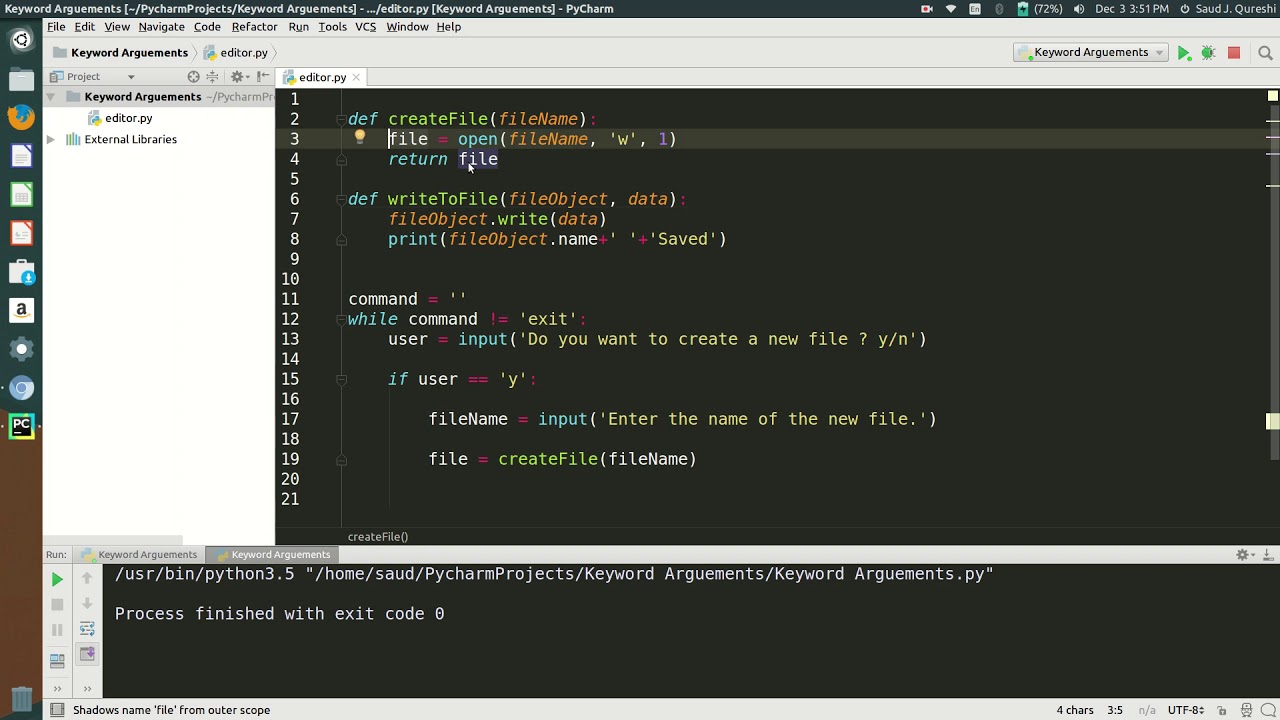


1. Load and Tokenize Text Data. To begin, we need a text corpus, a large and structured set of texts, to train our generator. You can use any text file you like, such as a novel, a collection of articles, or even your own writing. For this example, we will use the Alice in Wonderland text file.

4. Generate text function. Currently, our model expects batches of 128 sequences at a time. To generate one sequence at a time, we can create a new model that expects batch_size=1, load our saved model's weights into it, call .build() on the model, and then write a function that generates new text.

Example text generation application. We will be building a simpler variation of this web app. What you will need: this tutorial assumes you already have Python 3.7+ installed and have some understanding of language models. Although the steps can be done outside of Jupyter, using a Jupyter notebook is highly recommended. We will be using PyTorch as our deep learning library.

Generate text. The simplest way to generate text with this model is to run it in a loop and keep track of the model's internal state as you execute it. Each time you call the model, you pass in some text and an internal state; the model returns a prediction for the next character and its new state.
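The generation loop described above (call the model with a character and the current state, get back a prediction and a new state, sample the next character, repeat) can be sketched without any deep learning library. Here `CharNGramModel` is a hypothetical stand-in for a trained RNN: it predicts the next character from counts of the last `order` characters, and that context string plays the role of the hidden state. With a real TensorFlow or PyTorch model, the step function would change but the loop would not.

```python
import random
from collections import Counter, defaultdict


class CharNGramModel:
    """Hypothetical stand-in for a trained RNN.

    Predicts the next character from the last `order` characters; that
    context string plays the role of the RNN's internal state.
    """

    def __init__(self, text, order=2):
        self.order = order
        self.counts = defaultdict(Counter)
        for i in range(len(text) - order):
            self.counts[text[i:i + order]][text[i + order]] += 1

    def __call__(self, char, state):
        # Feed one character plus the previous state; return a prediction and a new state.
        new_state = (state + char)[-self.order:]
        return self.counts.get(new_state, Counter()), new_state


def generate(model, seed, length, rng=random):
    """Generate up to `length` characters after `seed`, carrying the state through the loop."""
    state = ""
    for ch in seed[:-1]:  # warm up the state on the seed text
        _, state = model(ch, state)
    out = list(seed)
    char = seed[-1]
    for _ in range(length):
        prediction, state = model(char, state)
        if not prediction:  # context never seen in training: stop early
            break
        chars, weights = zip(*prediction.items())
        char = rng.choices(chars, weights=weights, k=1)[0]
        out.append(char)
    return "".join(out)


if __name__ == "__main__":
    corpus = "alice was beginning to get very tired of sitting by her sister"
    model = CharNGramModel(corpus, order=3)
    print(generate(model, "ali", 40, random.Random(1)))
```

The seed should be at least `order` characters long so that the first lookup has a full context.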


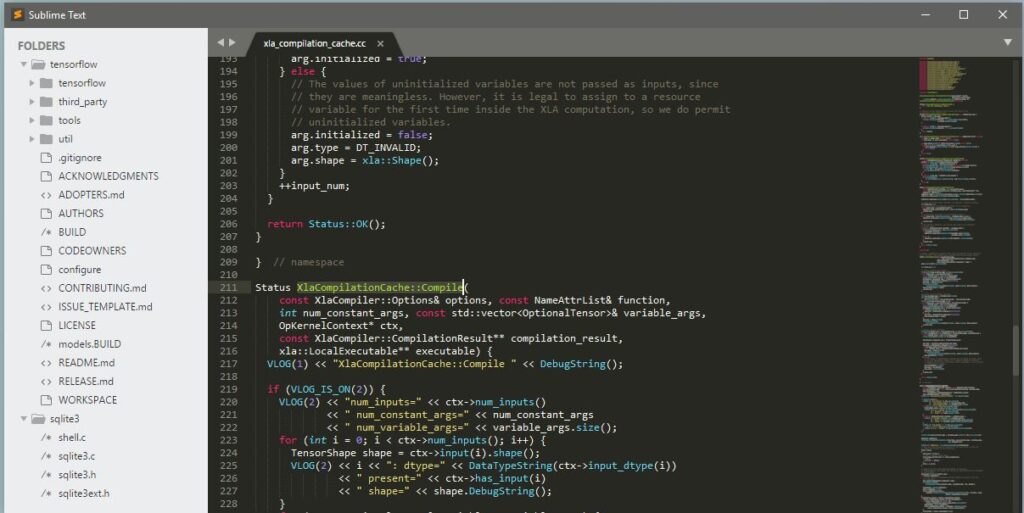

We need both the PyTorch and Transformers libraries installed to build our text generation model. The setup instructions for PyTorch vary depending on your system, CUDA version (if any), and Python release; fortunately, PyTorch provides an easy-to-use installation guide on its website. Next up is Hugging Face's Transformers library.

Code Generation. A text generation model, also known as a causal language model, can be trained on code from scratch to help programmers with repetitive coding tasks. One of the most popular open-source models for code generation is StarCoder, which can generate code in 80+ languages.
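"Causal" here means that each token is predicted only from the tokens before it. In a Transformer this is enforced with a lower-triangular attention mask. The plain-Python sketch below builds that mask and the (context, next token) pairs a causal model learns from, using a short code snippet. The hand-split tokens are an assumption for illustration; real code models such as StarCoder use learned subword tokenizers.

```python
def causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def next_token_pairs(tokens):
    """(context, target) pairs for causal LM training: predict tokens[i] from tokens[:i]."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


if __name__ == "__main__":
    tokens = ["def", "add", "(", "a", ",", "b", ")", ":"]
    for row in causal_mask(4):
        print(["x" if allowed else "." for allowed in row])
    for context, target in next_token_pairs(tokens)[:3]:
        print(context, "->", target)
```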

Topics covered:

- how to frame the problem of text sequences for a recurrent neural network generative model;
- how to develop an LSTM to generate plausible text sequences for a given problem.

Beginners Guide to Text Generation using LSTMs is a Python notebook built on the New York Times Comments dataset. The notebook has been released under the Apache 2.0 open source license.
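One common way to frame the problem for an LSTM is to slide a fixed-length window over the text and ask the network to predict the single character that follows each window. Below is a minimal sketch that assumes character-level input. The name `make_windows` and the tiny example are illustrative. In practice the integer windows would be reshaped into a `[samples, time steps, features]` array before being fed to a Keras LSTM layer, and the targets one-hot encoded.

```python
def make_windows(text, seq_length):
    """Slide a window of seq_length chars over text; the target is the char that follows."""
    chars = sorted(set(text))
    char_to_int = {c: i for i, c in enumerate(chars)}
    data_x, data_y = [], []
    for i in range(len(text) - seq_length):
        seq_in = text[i:i + seq_length]
        seq_out = text[i + seq_length]
        data_x.append([char_to_int[c] for c in seq_in])
        data_y.append(char_to_int[seq_out])
    return data_x, data_y, chars


def one_hot(index, size):
    """One-hot vector for a target class index."""
    vec = [0] * size
    vec[index] = 1
    return vec


if __name__ == "__main__":
    x, y, chars = make_windows("hello world", seq_length=3)
    print(len(x), "windows over a vocabulary of", len(chars))
    print(x[0], "->", y[0], one_hot(y[0], len(chars)))
```

Unlike the shifted input/target chunks used for stateful character RNNs, these windows overlap and each one carries exactly one target.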

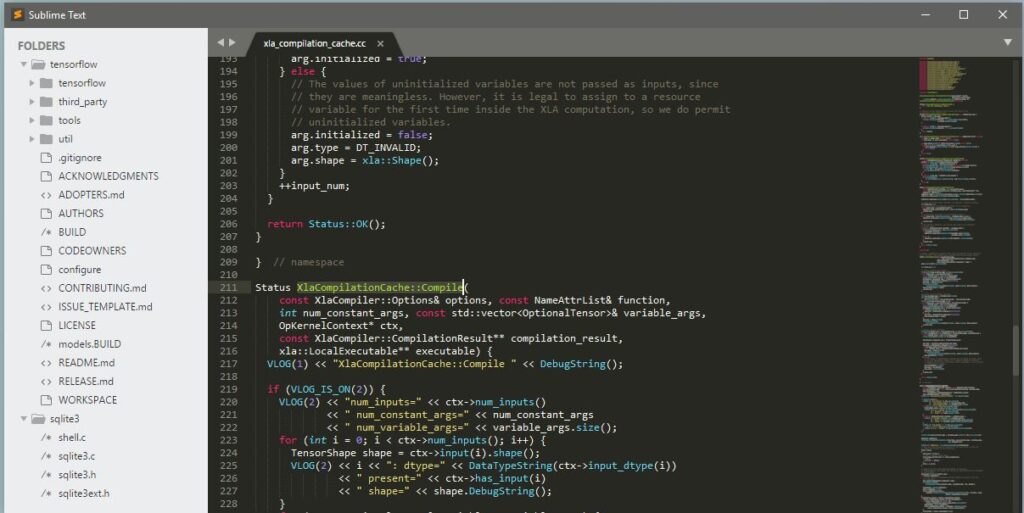


Learn how to build your own text generator in Python using OpenAI's GPT-2 language model. GPT-2 is a state-of-the-art NLP model and a truly incredible breakthrough. We will learn how it works and then implement our own text generator using GPT-2.

Text-Generating AI in Python (NeuralNine, Jan 16, 2021). Recurrent neural networks are very powerful when it comes to processing sequential data like text. They are a.
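Whatever the model, whether an RNN or GPT-2, each generation step ends with choosing the next token from the model's scores (logits). The sketch below shows top-k sampling with temperature, a common decoding strategy for GPT-2-style models. `sample_top_k` is a hypothetical helper, not part of any library's API, and the logits in the example are made-up numbers. Hugging Face's `generate` exposes similar behaviour through its `do_sample`, `top_k`, and `temperature` arguments.

```python
import math
import random


def sample_top_k(logits, k, temperature=1.0, rng=random):
    """Sample an index from the k highest logits after temperature scaling."""
    if k < 1 or temperature <= 0:
        raise ValueError("k must be >= 1 and temperature must be > 0")
    # Keep only the indices of the k largest logits.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    scaled = [logits[i] / temperature for i in top]
    # Softmax numerators, shifted by the max for numerical stability.
    m = max(scaled)
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(top, weights=weights, k=1)[0]


if __name__ == "__main__":
    logits = [0.1, 2.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    rng = random.Random(0)
    print([sample_top_k(logits, k=2, temperature=0.8, rng=rng) for _ in range(10)])
```

Lower temperatures sharpen the distribution toward the highest-scoring token, while `k` caps how many candidates can ever be chosen; `k=1` reduces to greedy decoding.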